AI’s superpower is its ability to recognise patterns. Anomaly detection builds on this by identifying deviations from what is considered ‘normal’. Anomalies can provide crucial insights into potential issues, exceptional events, or outliers within metrics. In this example we explain how we applied AI to help a customer whose payment terminals were malfunctioning with certain payment methods.

Know the data

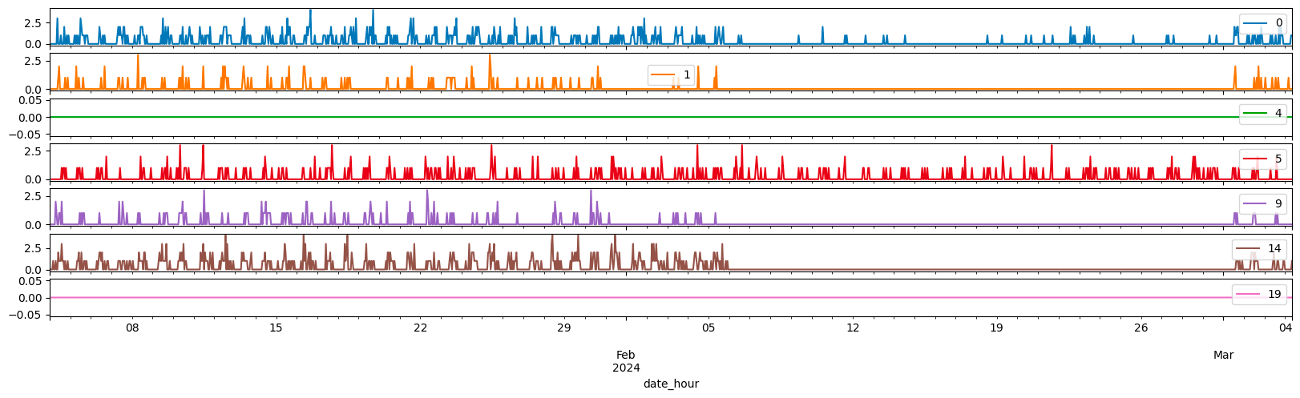

The first step of the process is to know your data. The chart above shows transactions over a set period for certain payment types, with each colour representing a different form of payment. To the naked eye, it’s quite obvious that payment types 1 (orange), 9 (purple) and 14 (brown) have had an outage and that 19 (pink) has been dead for a while. What might get overlooked is payment type 0 (blue), which appears to work only sporadically. What is clear is that transaction patterns have changed significantly since March 5th.

Stats will get you some of the way

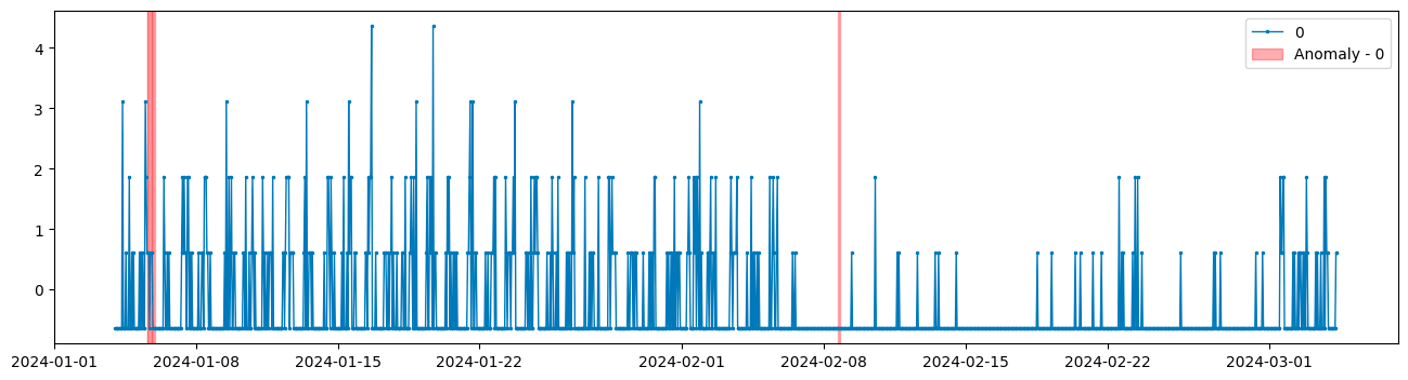

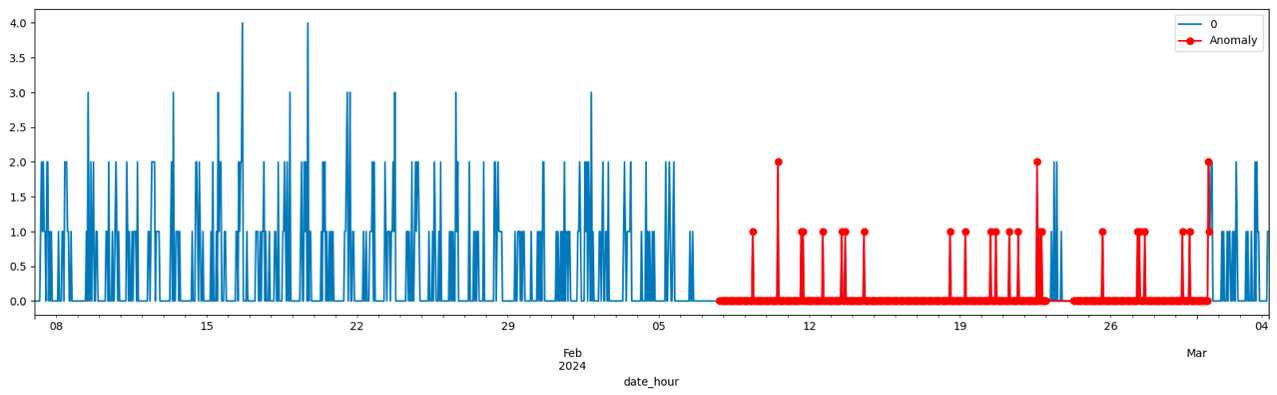

To better understand what is happening with the transactions, we applied PersistAD. PersistAD identifies anomalies that persist across consecutive windows. The chart shows transactions across a 24-hour period. Whilst the system was able to detect some anomalies, it was not able to detect the complete change in transaction patterns from March 5th onward. This method also requires some tuning on a site-by-site basis. Transaction patterns on smaller sites, for example, will deviate quite significantly from those on larger sites, as there may be longer periods without any transactions.
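The behaviour described above can be illustrated with a small sketch. It assumes PersistAD is the detector of that name from the ADTK library, and it is a simplified stand-in written with the Python standard library, not the library's implementation: each value is compared with the median of the values just before it, and a point is flagged when that difference falls outside an interquartile-range band. The function name `persist_anomalies`, the `window` and `c` parameters, and the hourly counts are all hypothetical.

```python
from statistics import median, quantiles

def persist_anomalies(values, window=3, c=3.0):
    """Return indices whose value differs sharply from the preceding window."""
    # Difference between each value and the median of the `window` values before it.
    diffs = [values[i] - median(values[i - window:i])
             for i in range(window, len(values))]
    # Band of "usual" differences: c interquartile ranges around the quartiles.
    q1, _, q3 = quantiles(diffs, n=4)
    iqr = q3 - q1
    low, high = q1 - c * iqr, q3 + c * iqr
    return [i + window for i, d in enumerate(diffs) if d < low or d > high]

# Made-up hourly transaction counts: stable traffic, then an outage from index 12.
counts = [20, 22, 21, 19, 20, 23, 21, 20, 22, 21, 20, 22, 0, 0, 0, 0, 0, 0]
flagged = persist_anomalies(counts)
print(flagged)
```

On this invented series only the first two hours of the outage are flagged. Once the look-back window fills with zeros, the rest of the outage looks ‘normal’ again, which is consistent with the limitation noted above.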

Autoencoders

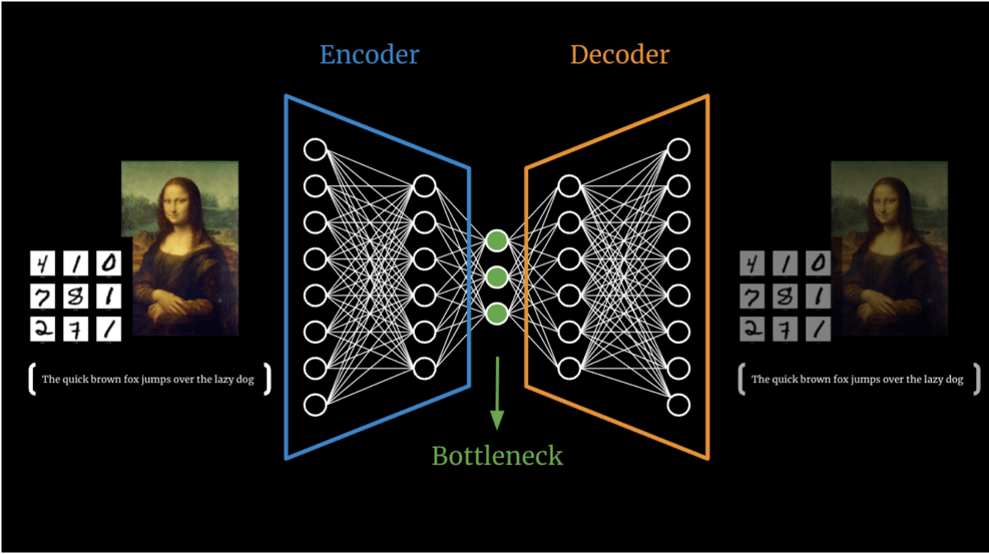

The next step was to try an autoencoder to detect anomalies in the data. An autoencoder is a type of neural network architecture designed to efficiently compress (encode) input data down to its essential features, then reconstruct (decode) the original input from this compressed representation.

An example of this from everyday life is being able to identify a song when someone is humming a melody. This is essentially what autoencoders do. They learn a representation of the input (the song) using a lot less knowledge (humming) and then decode the internal representation to approximate it back to its original input (identifying the song).

Autoencoders, like large language models (LLMs), rely on neural networks to achieve their capabilities and come in a range of configurations depending on the task you want to solve. They are often trained using unsupervised learning, which means the training data is not labelled, to discover hidden relationships in the input data.

Aside from anomaly detection, autoencoders can also be used for a number of other applications:

- Image Denoising – Vivino app is a wine scanner that allows users to instantly view a wine’s taste characteristics, facts, and unbiased reviews. The app was originally manually trained on clean labelled photos but is now able to quickly identify the wine you are drinking even from a noisy photo taken in a restaurant.

- Image Segmentation – Tesla use something similar in their Full Self Driving Autopilot technology to enrich the understanding of the images they are capturing from the cameras on their cars.

- Data Generation – The Neural Paint in Google’s Pixel phone allows users to remove unwanted objects from a photo or repaint a missing area.

To detect anomalies in the transaction data, both the encoder and the decoder were configured as one-dimensional Convolutional Neural Networks, and we used unsupervised training on a ‘normal’ part of the data.
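The general recipe (train on ‘normal’ windows, then flag windows that reconstruct poorly) can be sketched without a deep learning framework. The sketch below is an assumption-laden toy, not the model used for the customer: it swaps the one-dimensional convolutional encoder and decoder for a linear autoencoder with a single bottleneck unit, fitted by power iteration (equivalent to keeping the first principal component). The daily shape `base`, the noise levels, the `3 *` threshold margin and all function names are invented for illustration.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def fit_linear_autoencoder(windows, iterations=50):
    """Return (mean, direction) for a one-unit linear bottleneck."""
    n, dim = len(windows), len(windows[0])
    mean = [sum(w[j] for w in windows) / n for j in range(dim)]
    centred = [[w[j] - mean[j] for j in range(dim)] for w in windows]
    v = [1.0] * dim
    for _ in range(iterations):
        # Power iteration: v <- X^T (X v), then normalise to unit length.
        scores = [dot(row, v) for row in centred]
        v = [sum(s * row[j] for s, row in zip(scores, centred)) for j in range(dim)]
        norm = dot(v, v) ** 0.5
        v = [x / norm for x in v]
    return mean, v

def reconstruction_error(window, mean, v):
    """Encode to one number, decode back, and return the mean squared error."""
    centred = [x - m for x, m in zip(window, mean)]
    code = dot(centred, v)                              # encode (bottleneck value)
    rebuilt = [m + code * x for m, x in zip(mean, v)]   # decode
    return sum((a - b) ** 2 for a, b in zip(window, rebuilt)) / len(window)

# Hypothetical 'normal' data: one daily shape at varying volume plus small noise.
base = [2.0, 5.0, 9.0, 12.0, 9.0, 5.0]
rng = random.Random(42)

def make_window():
    scale = rng.uniform(0.8, 1.2)
    return [scale * b + rng.uniform(-0.3, 0.3) for b in base]

normal = [make_window() for _ in range(20)]
mean, v = fit_linear_autoencoder(normal)

# Illustrative threshold: three times the worst error seen on normal data.
threshold = 3 * max(reconstruction_error(w, mean, v) for w in normal)

typical_day = [1.05 * b for b in base]
partial_outage = [2.0, 5.0, 9.0, 0.0, 0.0, 0.0]   # traffic stops mid-window
for name, window in [("typical", typical_day), ("outage", partial_outage)]:
    err = reconstruction_error(window, mean, v)
    print(name, "anomalous" if err > threshold else "normal")
```

A single linear unit is deliberately crude: it would reconstruct a uniformly scaled-down day almost perfectly, so it cannot tell a very quiet day from a proportionally degraded one. A deeper, nonlinear model would be expected to capture richer structure, at the cost of the tuning discussed later.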

Convolutional Neural Networks are a cornerstone of Deep Learning: they are excellent at learning patterns and then generating their own similar patterns. Deep fakes, false images that have been digitally altered to appear real, are a perfect example of this. The technology uses Convolutional Neural Networks to learn patterns, for example a famous person’s face, and then uses these to generate similar patterns, resulting in new images.
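To make the idea of pattern detection concrete, here is a minimal sketch of the sliding-window operation at the heart of a one-dimensional convolutional layer. In a trained network the kernel weights are learned from data; here the kernel is hand-picked, and both it and the transaction counts are hypothetical.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal and return the weighted sum at each position."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

# Hand-picked kernel that responds strongly to a sudden drop between neighbours.
drop_kernel = [1.0, -1.0]

# Made-up transaction counts with an outage starting at index 4.
counts = [20, 21, 20, 22, 0, 0, 0]
response = conv1d(counts, drop_kernel)
strongest = response.index(max(response))
print(response, strongest)
```

The response peaks at the position where the counts fall from 22 to 0. A convolutional network stacks many such kernels and learns their weights, so it can respond to far subtler shapes than this hand-written drop detector.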

The autoencoder learns dynamically from patterns in the data, and by applying it we were able to recognise whenever payment transactions looked odd (see the example image above).

Fine tuning

Even though we found a solution to the customer’s problem using the autoencoder, we still needed to maximise the performance of the AI, much like tuning the aerodynamics of an F1 car from track to track.

We fine-tuned the parameters for this specific use case: selecting the most feasible topology for both the encoder and the decoder (yes, they can be different); deciding how many neurons or nodes to use in each layer; selecting a loss function to measure the optimisation; and choosing the size of the bottleneck so that the model doesn’t overfit (simply memorising all the examples) or underfit (treating everything as the same) but still captures the most vital information.
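As one illustration of why the choice of loss function matters, the sketch below compares two common reconstruction losses, mean squared error and mean absolute error, on the same invented window. Which loss was actually used for the customer is not stated here, so this is an example of the trade-off and not a description of the final configuration.

```python
def mse(actual, rebuilt):
    """Mean squared error: large misses dominate the score."""
    return sum((a - b) ** 2 for a, b in zip(actual, rebuilt)) / len(actual)

def mae(actual, rebuilt):
    """Mean absolute error: every miss counts in proportion to its size."""
    return sum(abs(a - b) for a, b in zip(actual, rebuilt)) / len(actual)

# Made-up window: the last hour drops to zero but the model rebuilds 'normal' traffic.
actual = [20, 22, 21, 0]
rebuilt = [20, 21, 21, 20]

print(mse(actual, rebuilt))  # 100.25
print(mae(actual, rebuilt))  # 5.25
```

The single large miss accounts for almost all of the squared error, so a model trained with it is pushed hard to avoid big deviations, while absolute error is more tolerant of occasional spikes.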